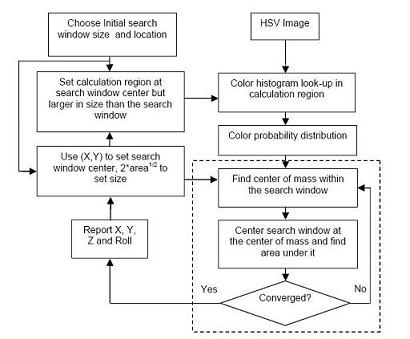

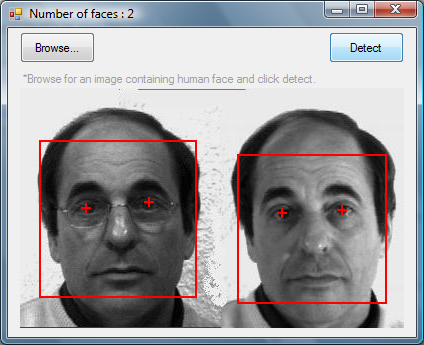

I am trying to build a robot that I can control with basic eye movements. I am pointing a webcam at my face, and depending on the position of my pupil, the robot would move a certain way. If the pupil is in the top, bottom, left corner, or right corner of the eye, the robot would move forwards, backwards, left, or right respectively.

My original plan was to use an eye Haar cascade to find my left eye. I would then use `cv2.HoughCircles` on the eye region to find the center of the pupil. I would determine where the pupil was in the eye by finding the distance from the center of the Hough circle to the borders of the general eye region.

So for the first part of my code, I'm hoping to be able to track the center of the eye pupil, as seen in this video.

But when I run my code, it cannot consistently find the center of the pupil. The Hough circle is often drawn in the wrong area.

How can I make my program consistently find the center of the pupil, even when the eye moves? Is it possible, better, or easier for me to tell my program where the pupil is at the beginning? I've looked at some other eye tracking methods, but I cannot form a general algorithm. If anyone could help form one, that would be much appreciated!

    face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
    eye_cascade = cv2.CascadeClassifier('haarcascade_righteye_2splits.xml')
    # ...
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    #faces = face_cascade.detectMultiScale(gray, 1.3, 5)
    eyes = eye_cascade.detectMultiScale(gray)
    # ...
    cv2.rectangle(img,(ex,ey),(ex+ew,ey+eh),(0,255,0),2)
    # ...
    circles = cv2.HoughCircles(roi_gray2,cv2.HOUGH_GRADIENT,1,20,param1=50,param2=30,minRadius=0,maxRadius=0)
    # ...
    cv2.circle(roi_color2,(i[0],i[1]),i[2],(255,255,255),2)
    # ...
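The direction rule described in the question (pupil near the top, bottom, left, or right of the eye region maps to forwards, backwards, left, or right) can be sketched as a small pure function, independent of how the pupil center is found. This is only a minimal sketch under assumptions: the function name `pupil_direction`, the `"stop"` result, and the `dead_zone` threshold are hypothetical and not part of the original code. It takes the eye box in the `(ex, ey, ew, eh)` form used in the question and the pupil center in the same coordinate system.

```python
def pupil_direction(pupil_center, eye_box, dead_zone=0.2):
    """Classify where the pupil sits inside the eye region.

    pupil_center: (cx, cy), in the same coordinates as eye_box.
    eye_box: (ex, ey, ew, eh), the rectangle around the eye.
    dead_zone: hypothetical tuning value; normalized offsets smaller
               than this are treated as "looking straight" -> "stop".
    """
    cx, cy = pupil_center
    ex, ey, ew, eh = eye_box
    if ew <= 0 or eh <= 0:
        raise ValueError("eye_box must have positive width and height")

    # Offset of the pupil from the box center, normalized so that the
    # box borders sit at -1 and +1 on each axis.
    dx = (cx - (ex + ew / 2)) / (ew / 2)
    dy = (cy - (ey + eh / 2)) / (eh / 2)

    if max(abs(dx), abs(dy)) < dead_zone:
        return "stop"
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    # Image y grows downward, so a negative dy means the pupil is near the top.
    return "forwards" if dy < 0 else "backwards"


# Example: a 100x50 eye box with the pupil close to the top border.
print(pupil_direction((50, 5), (0, 0, 100, 50)))
```

Normalizing by the box size keeps the rule independent of how large the eye appears in the frame. Note that "left" and "right" here are in image coordinates; a webcam image may be mirrored relative to the user, in which case the two labels would need to be swapped.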
